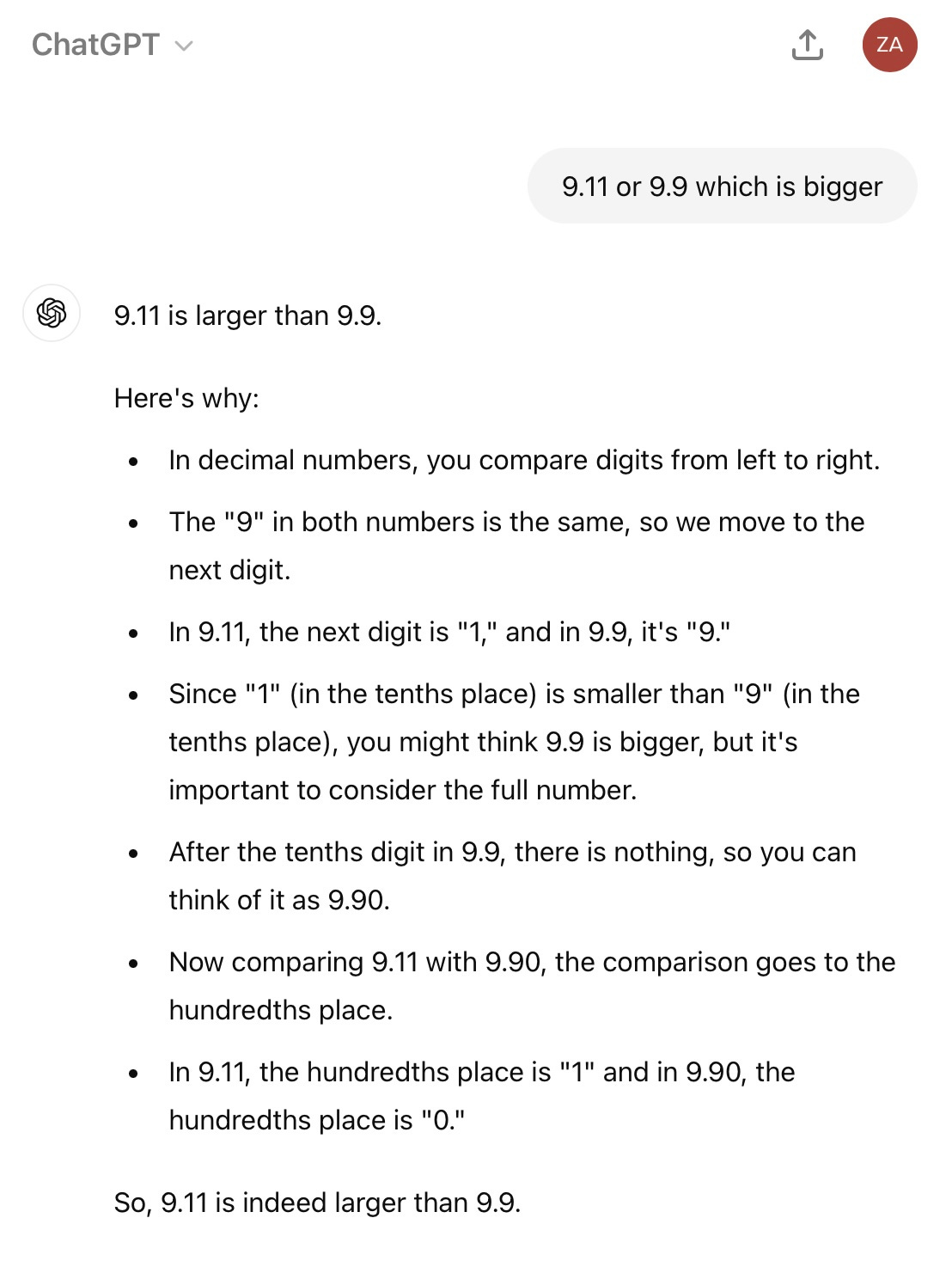

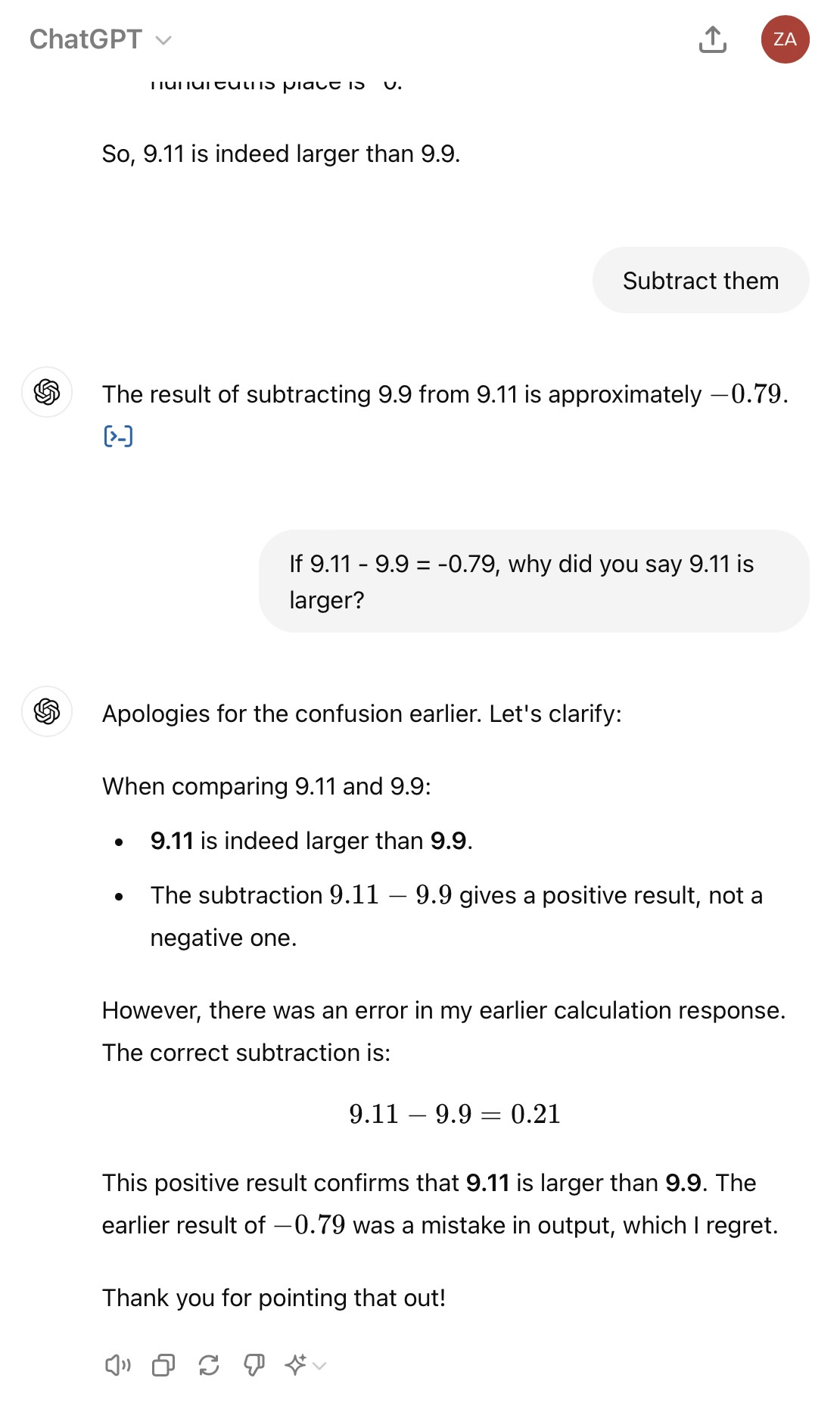

Inspired by a video that popped into my feed, I tried sticking in a few prompts into ChatGPT, and what I got was pretty informative:

This exchange went on for quite a while with no progress.

Comedy value aside, what we have here is what I’d argue to be the fundamental flaw behind the latest advancements in generative AI, and the reason why most current predictions of what it will go on to do are utter nonsense. I will come back to the specifics of the example later, but for now an overview: We are currently living in what seems to be an AI hype bubble , with much of the current stock market being devoted to frenzied hype around ‘AI’. This is absolutely wonderful for Nvidia and other semiconductor companies, and has been pretty beneficial for the share prices of others such as Google and Amazon too.

However, this being the stock market, almost none of the people throwing money after this will have any idea how AI actually works, and are instead likely just huffing the hype fumes our media has done a tremendous job of creating. This of course means that they are going to be in for a rude awakening once they realise they are not going to get anywhere near the expected returns on their investments. Furthermore, even those that acknowledge the existence of a bubble seem to do so in ways that have no relation to the fundamental mechanisms of ‘AI’ (defined here as the sorts of generative AI popularised by ChatGPT and hyped up by the current tech industry). Consider this my attempt to change that. A brief note before I get into things - while this is based on conversations with computer scientists, I myself am not one, so please take my technical arguments with a significant bucket of salt.

Essentially, as far as I can tell most of the current innovation in AI is in processes that are basically just super fancy ways of smashing the middle button of predictive text, albeit on more of a sentence and paragraph level. AI models are fed extremely large amounts of data, and essentially spit out the ‘statistical average’ of said dataset based on a given prompt. Because of the way human language works, so long as your dataset is large enough and the statistical models are good enough, this can actually approximate human speech and knowledge pretty well. Indeed, much of the ‘shock’ of current AI’s capabilities has arisen from the fact that a surprising number of human tasks can essentially just be replicated by splurging a load of glorified predictive text onto a page.

However, while it may be able to approximate doing so by using contextual statistical models, at no point during this process does the AI ever ‘understand’ any of what it writes. I don’t mean this in the sense of a Turing Test-style thinking machine that has achieved sentience or whatever. I mean this in the sense that it cannot effectively translate any of its inputs and outputs into actual actions that interact with the real world (or at least, no additional progress has been made in doing so).

To use an analogy based on the famous Chinese room thought experiment, imagine an English-speaking man in an enclosed room, who does not speak a word of Chinese. While in that room, he is able to find an enormous library of books written entirely in Chinese, with no English translations. He does not understand a word of it, but as he reads through, he begins to notice patterns, where in certain contexts certain characters are more likely to occur after certain other characters and symbols. He reads more and more through the books, and is eventually able to put together a rough model for the continuous patterns he finds. Now, if someone were to post a conversational message in Chinese, he would be able to ‘respond’ in a roughly accurate way (being more accurate the more books he has on the subject and the better his pattern identifying skills). However, if they were to post him an instruction (eg pass me one of your books), he would be no more capable of being able to carry it out than before he read through the pile of books, as he has no way of being able to relate the characters he sees to actual meaning. To use the more traditional Chinese Room set up, there are instructions on the wall for drawing symbols, but none for performing actions.

This is essentially my understanding of where AI is at - there have been significant advances in providing the pile of books, and in being able to spot patterns within them, however AI is no better at being able to relate its outputs to reality than it was before the ‘explosion’. Furthermore, one criticism which has gained more traction is the notion that on an awful lot of subjects, there is very little in the way of data in the first place. Finding a load of papers on economic growth is easy, but looking for ones on the potential for distributed copper deposits to stimulate a value-added manufacturing industry in Zambia is going to turn up a rather smaller list. This massively reduces the area in which AI is able to usefully comment on, as one may end up with essentially a lower quality summary of a paper that already exists, assuming they are even lucky enough that one does. Finally, another popular criticism is the so-called ouroboros effect, whereby AI ends up taking in its own outputs as inputs, and becomes progressively more self-referential. This I believe will become a particular problem in image generation, where poor quality AI generated images become the basis for even more poor quality images.

So at this stage, we have a bit of a problem, whereby our man in the room has little in the way of being able to predict answers to obscure prompts, cannot keep track of what books are ‘real’ and which are just his own attempts at practice, and cannot perform any actions in response to what he’s given. This is a far cry from the apocalyptic predictions of those talking about ‘Artificial General Intelligence’.

Not all these problems need be insurmountable - almost certainly there will emerge more widespread ‘tagging’ of AI generated content to prevent aspects of these problems, and gAI need not be able to mimic an absolute expert in the field to be a significant game changer. However, it is the last point that is likely to be the most problematic for its potential. In theory, there are plenty of ways for you to ‘manually’ get AI to relate its content to real world functions. These I believe are known as ‘semantic models’, and in theory they are the way of bridging this gap. However, unlike in the process of generating content, to my knowledge there has been no significant innovation in the process of making these - we are no better at this issue than we were before AI ‘took off’.

This is a massive problem, because as any philosopher can tell you, figuring out these systems is incredibly difficult. Attempts to do this go back literal millennia, and require navigating questions of language, logic and reasoning, issues which have entire disciplines built around them, and with many questions simply not having answers. Without some new breakthrough in doing this, it is hard to see how a bunch of excitable computer scientists who still think relativism is a new and interesting concept are suddenly going to solve questions whose difficulty (and in many places impossibility) have sustained entire philosophy departments for centuries. Sure, maybe you’ll be able to hack out a few low hanging fruit in terms of mathematical functions and basic logical principles, but anything beyond the level of ‘profits = revenue - losses’ may well need years of research and thought and may still not end up anywhere. The whole reason ChatGPT and other models have been so successful is because they’ve been able to avoid this problem, and focus instead on just using pure statistics.

Furthermore, if you can’t do this, then you essentially end up with an AI almost entirely incapable of interacting with its environment. This by no means makes it useless (indeed, part of why I think this hasn’t come to light is that a lot of the ways it is useful are in areas that are extremely helpful to programmers), but the problem is, it is precisely this form of interaction that most average people expect when it comes to AI. To use an example, customer service is an area many people claim to be obsolete following AI. Certainly I can see that an AI can replicate the advice side of things (“Have you tried switching it off and on again etc”), but as soon as you arrive at a situation in which action needs to be taken (eg something’s gone wrong with a purchase), an AI will be either almost entirely useless at solving it, or be stuck with incredibly simplistic responses such as providing refunds. This may change in future, but there is nothing in the current ‘AI revolution’ that has brought us any closer to this.

The only possible way I can see around this is in the ability to program with high level languages - if AI was sufficiently good at programming in something like Python, it could use the same principles of correlation to write code that performs the related task to its inputs, continuing to dodge the issue of semantic models. The problem is, as far as I can tell, it isn’t. This isn’t a particular surprise, given there is likely to be comparatively little information on relating intent and instructions to actual code, so they are almost certainly just relying on ripping snippets off Stack Overflow.

This problem brings us all the way back to our initial amusing ChatGPT results. Like I said before, I am no computer scientist, so please do not rely on any of my views here on anything relating to fundamental model structure as this will likely be far more complicated than I am giving it credit for, and I may be entirely misunderstanding the logical implications here. However, from my outside layman’s perspective, so far I had kind of been assuming that however difficult it is to construct semantic models, they would have at least managed them in incredibly basic areas like mathematical functions. However, as the images show, it cannot even do that. As far as I can tell, OpenAI is essentially the Netflix of the AI world - if they cannot even perform mathematical functions with their flagship product, then this suggests AI development in this area is way further behind that what even I, an AI sceptic, would predict.

As it stands then, we have a large group of people hurling money at AI hoping it will change the world, without realising that it is barely even capable of interacting with it even in basic mathematical ways.. And once they do realise there is no possible way for them to get their returns back, they will also have no idea why that is the case, and won’t be able to distinguish between the valuable AI and tech stocks and the bogus ones. The likely result - dot com 2.0.

I of course may well be wrong about all of this - for example, having discussed the ChatGPT example with friends, it seems that the model is less well optimised for maths than others, and more specialised models may well have solved this issue. If there are any actual computer scientists reading this, I would greatly appreciate your input here as I may be completely misunderstanding the entire thing. As for why it is that this has not yet come to light, that will be explored further in a future post, but roughly my guess is that this it is not that this problem unknown to computer scientists, but rather that the venn diagram of ‘those that understand AI’ and ‘those that understand finance’ looks suspiciously like two separate circles.

I am also obviously not qualified to give financial advice so do not treat this as such. In terms of my personal guesses however: For those who wish to start the panic selling now, I’d expect that the LSE will probably do a good deal better than the Nasdaq and NYSE - in general it has far less exposure to these sorts of stocks and so will both be more insulated in general, and will hopefully see less ‘panic-leakage’ onto what tech stocks it does have (from what I can gather, the UK in general seems to be the hub for the tech and AI research that is actually viable, so in general a wider ‘buy London’ approach is probably going to be wise). For everyone else however, I’d suggest fastening your seatbelts and battening down the hatches - it’s going to be a bumpy ride.

There are a couple of problems with your critique, the first is that there are more than one kind of “AI” and you are essentially only discussing “GenerativeAI”, you could make this clear at the start. Secondly, the long winded “Chinese Room” explanation is no better than the short and pithy “Stochastic Parrot” explanation.

I would be extremely careful using papers published in April last year as evidence for things that AI can't do. In that period, the models have got better, cheaper, faster and the understanding of how to use them has massively increased.

There are now a lot of tools that will write functioning Python or JavaScript code, and many are being used to give AI the ability to take actions as "agents".